The market for AI-powered video generation has heated up since OpenAI unveiled its Sora model a month ago. Now two DeepMind alums, Yishu Miao and Ziyu Wang, have made their video-generation tool Haiper, built on an AI model of their own, available to the public.

Miao, who previously worked on TikTok's Global Trust & Safety team, and Wang, a former research scientist at both DeepMind and Google, started working on the company in 2021 and formally incorporated it in 2022.

Drawing on their machine learning expertise, the two began by applying neural networks to the problem of 3D reconstruction. Miao told TechCrunch on a call that after training on video data, they found video generation to be a more interesting problem than 3D reconstruction. As a result, Haiper shifted its focus to video generation about six months ago.

Video-generation service

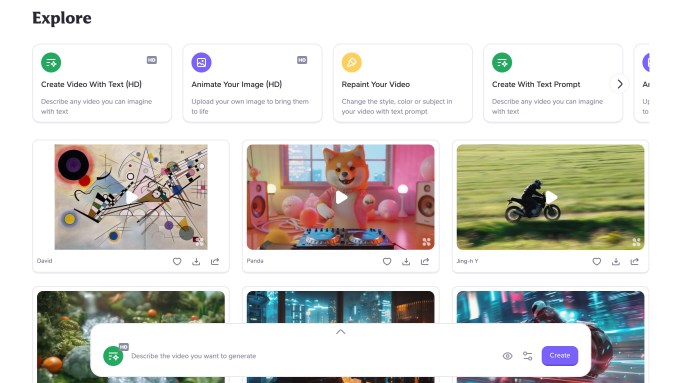

Users can generate videos for free on Haiper's website by entering text prompts. There are limits, however: videos can be at most two seconds long in HD, or four seconds long at slightly lower quality.

The site also lets users animate images and repaint videos in different styles. The company is working on further features, such as the ability to extend videos.

According to Miao, the company aims to keep these features free in order to build a community. He said it is "too early" in the startup's journey to consider a subscription product for video generation. Even so, Haiper has partnered with companies such as JD.com to explore potential commercial applications.

A sample video was generated using one of the original Sora prompts: "Several giant wooly mammoths approach treading through a snowy meadow, their long wooly fur lightly blows in the wind as they walk, snow covered trees and dramatic snow capped mountains in the distance, mid afternoon light with wispy clouds and a sun high in the distance creates a warm glow, the low camera view is stunning capturing the large furry mammal with beautiful photography, depth of field."

Building a core video model

While Haiper is focused on its consumer-facing website for now, it intends to build a core video-generation model that could be offered to third parties. The company has not disclosed any details about the model.

Miao said the company has privately invited a number of developers to test its closed API. He expects developer feedback to be crucial because the company is iterating on the model rapidly. Haiper has also considered open-sourcing its models in the future so that people can explore a variety of use cases.

Miao, the company's CEO, believes that solving the uncanny valley problem, the unsettling feeling people get when they see AI-generated humanlike figures, is the crucial challenge in video generation right now.

“Our focus is not on resolving issues related to content and style; rather, we are attempting to resolve fundamental concerns such as the appearance of AI-generated humans while walking or snow falling,” he explained.

The company currently has about 20 employees and is hiring for several engineering and marketing roles.

Competition ahead

OpenAI's Sora, which was only just unveiled, is arguably Haiper's best-known competitor. Other competitors include Runway, which is backed by Google and Nvidia and has raised more than $230 million in funding. Meta and Google also have their own video-generation models, and Stability AI released its Stable Video Diffusion model as a research preview last year.

Rebecca Hunt, a partner at Octopus Ventures, believes that to stand out in this market over the next three years, Haiper will need to build a strong video-generation model.

"Realistically, only a small number of people are capable of doing this; this was one of the reasons we decided to back the Haiper team," she wrote in an email to TechCrunch. "Once the models get past the uncanny valley and accurately represent real-world physics, there will be a point where the applications are limitless."

Although investors are interested in funding AI-powered video-generation startups, they also believe the technology still has considerable room to improve.

"AI video appears to be at the GPT-2 level. Despite significant progress over the past year, there is still a long way to go before ordinary consumers use these products daily. When will the ChatGPT moment for video arrive?" Justine Moore of a16z commented last year.